Generative AI Security Issues: What Businesses Should Know

Generative AI tools such as ChatGPT and Google Gemini belong to a branch of artificial intelligence that focuses on creating new content such as text, images, or videos. These tools have gained popularity in recent years across various industries, including for marketing tasks such as online research, SEO, or Google Analytics 4 data analysis. However, while these tools can boost a company’s productivity, they come with significant security concerns that businesses must address to protect their data and overall integrity.

Data Security Breach

When a company uploads a file to a generative AI tool, the file is typically stored on the provider’s servers. This storage creates a risk for the company: if the provider’s systems are breached, the file could be leaked to unknown parties that may harm the company. For example, if you’re a data analyst at a non-profit organization and you upload a spreadsheet containing sensitive donor information to a generative AI tool, the provider may store that file. If its systems are later breached, the sensitive information could be exposed and could harm the donors.

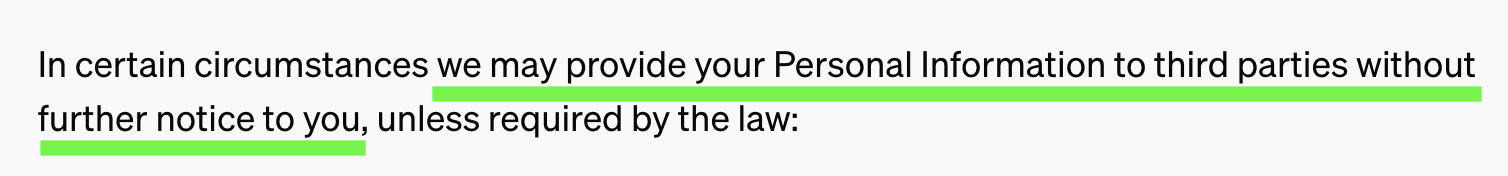

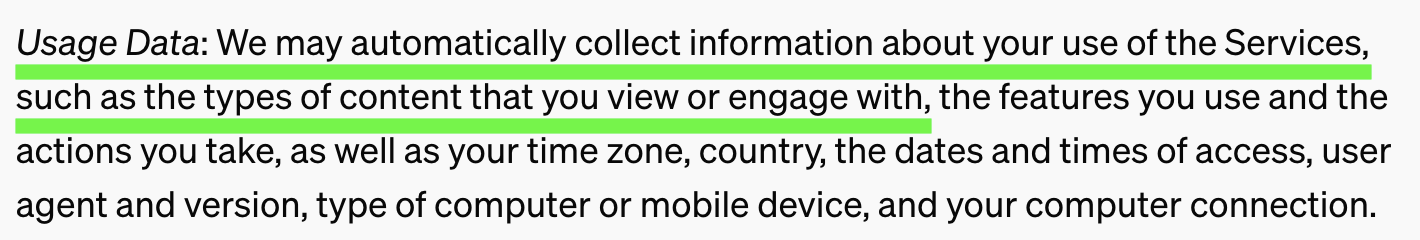
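
One way to reduce the exposure described in the donor spreadsheet example is to remove sensitive columns before a file ever leaves the company. The snippet below is a minimal sketch of that idea, not a complete safeguard. The function name `strip_sensitive_columns` and the column names in `SENSITIVE_COLUMNS` are hypothetical and would need to match your own spreadsheet.

```python
import csv
import io

# Hypothetical column names; adjust to match the actual spreadsheet.
SENSITIVE_COLUMNS = {"name", "email", "phone", "address"}


def strip_sensitive_columns(csv_text, sensitive=SENSITIVE_COLUMNS):
    """Return a copy of the CSV text with the sensitive columns removed."""
    reader = csv.DictReader(io.StringIO(csv_text))
    # Keep only headers that are not in the sensitive set (case-insensitive).
    kept = [
        column
        for column in (reader.fieldnames or [])
        if column.strip().lower() not in sensitive
    ]
    output = io.StringIO()
    writer = csv.DictWriter(
        output, fieldnames=kept, extrasaction="ignore", lineterminator="\n"
    )
    writer.writeheader()
    for row in reader:
        # extrasaction="ignore" drops every field that is not in `kept`.
        writer.writerow(row)
    return output.getvalue()


sample = "Name,Email,Amount\nAda,ada@example.org,50\n"
print(strip_sensitive_columns(sample))
```

With the sample above, only the `Amount` column remains. Dropping columns by name will not catch sensitive details buried in free-text fields, so a manual review before uploading is still advisable.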

Data Sharing

Generative AI tools, such as ChatGPT and Gemini, can use or share users’ personal information and the input provided by users, often without notifying them. ChatGPT explicitly mentions this in its Privacy Policy.

When personal information is provided to or shared with third-party entities without a company’s knowledge, it could present a security or data risk for the company.

Information Inaccuracies

Outputs from generative AI tools, such as ChatGPT, can be false. Users need to fact-check the answers these tools provide before relying on them. ChatGPT’s Terms of Use state that the tool’s output may not always be accurate.

Malicious Manipulation

To expand on the section above, bad actors online may try to manipulate the output that generative AI tools provide. They can post false information online that generative AI tools might gather and use in their responses. For example, someone might share false information in a Reddit thread or comment, and a generative AI tool could pick up that information and repeat it in an answer to a prompt. This is dangerous for businesses or anyone using generative AI tools for research.

Generative AI offers immense potential for businesses, but it also introduces a range of security challenges that cannot be overlooked. From data privacy concerns to false information risks, the threats posed by generative AI are diverse and complex. By understanding these risks and implementing robust security measures, businesses can responsibly use the features of generative AI tools while safeguarding their operations, reputation, and customer trust.